Will LLMs Compete for Dominance — Just Like Search Engines Did?

LLMs, Search Wars, and the Rise of Generative Engine Optimization

For more than two decades, one habit defined how people navigated the internet: “Just Google it.” That phrase didn’t emerge overnight. It was the result of years of competition, confusion, and consolidation during the early days of search.

Today, we’re standing at the beginning of a remarkably similar moment — but instead of search engines competing for clicks, large language models (LLMs) are competing to become the default way people ask questions, discover information, and make decisions.

The battle for AI dominance may feel new, but its shape is familiar.

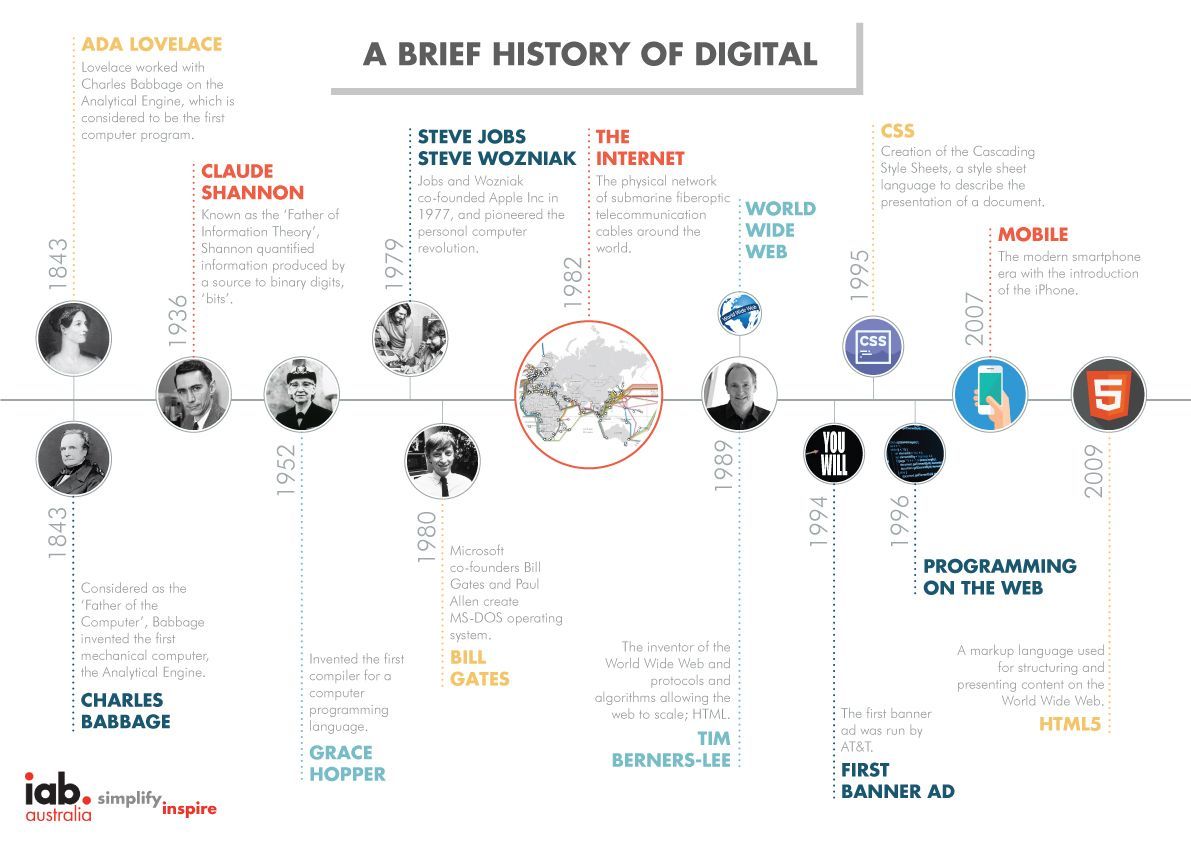

The Early Search Wars: Choice Before Clarity

In the late 1990s and early 2000s, users didn’t instinctively know where to search. Yahoo, AltaVista, Ask Jeeves, Lycos, Excite, and others all promised better ways to navigate the web. Browsers and portals competed to become the starting point for the internet, and users experimented freely.

Google ultimately rose to dominance not because it was the only option, but because it consistently delivered simpler experiences and more relevant results. Over time, user trust consolidated around a single habit. Search went from fragmented to centralized.

That consolidation shaped everything that followed — from SEO to digital advertising — because discoverability depended on one primary gatekeeper.

LLMs Are Replaying the Same Competitive Pattern

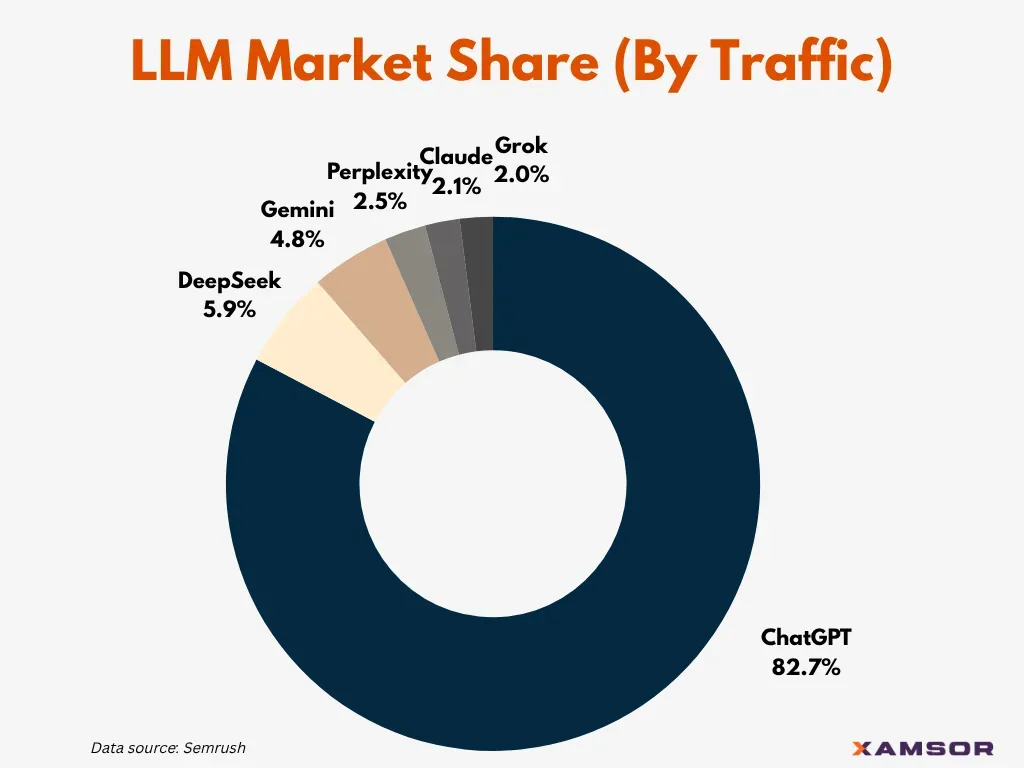

Today’s AI landscape feels strikingly similar to that early search era. ChatGPT, Google Gemini, Microsoft Copilot, Perplexity, Claude, and others are all competing to answer the same question:

Which AI should users go to first?

But unlike traditional search engines, LLMs don’t return ranked lists of links. They generate direct answers, summaries, and explanations — often without clearly showing how those answers were formed.

This changes user behavior entirely:

- People compare answers across models

- Different tools give different responses to the same prompt

- Users begin choosing models based on tone, speed, or familiarity — not just accuracy

Instead of one obvious winner, the ecosystem feels fragmented and experimental.

Trust, Accuracy, and the New Confusion Problem

That fragmentation creates a new challenge: trust.

A recent BBC analysis of AI assistants found that many generated answers to news-related questions contained factual errors, misattributions, or altered context. In some cases, AI systems confidently presented incorrect or misleading information.

This matters because LLMs don’t just point users to sources; they often stand in for the act of verification itself. When an answer is wrong, users may not realize it, because there is no list of results to cross-check.

As more people rely on AI for search-like behavior, consumers face a growing question:

Which AI should I trust for this answer?

This uncertainty mirrors the early search era — but with higher stakes, because AI-generated answers feel authoritative by default.

A More Fragmented Future Than Search Ever Was

While Google eventually became the default search engine, the LLM era may not consolidate as cleanly.

Different models excel at different tasks:

- Some prioritize conversational depth

- Others emphasize citations or real-time data

- Some are embedded into operating systems or browsers

Rather than one dominant tool, users may adopt multiple AI entry points, depending on context. The result would be a lastingly fragmented discovery environment, one where no single model controls visibility.

And that fragmentation changes everything for brands, publishers, and creators.

From SEO to Generative Engine Optimization (GEO)

In the search era, visibility depended on Search Engine Optimization (SEO): keywords, backlinks, rankings, and clicks. But LLMs don’t work that way.

They don’t present ranked pages; they synthesize information. They choose what to include, what to omit, and how to phrase answers based on how well content is understood, trusted, and structured for generative systems.

This shift gives rise to Generative Engine Optimization (GEO).

GEO focuses on:

- Structuring content so AI models can interpret it accurately

- Reinforcing authority, clarity, and contextual signals

- Ensuring brands and ideas are represented correctly inside AI-generated answers
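One widely used way to make content easier for machines to interpret is schema.org structured data expressed as JSON-LD. The sketch below is only an illustration: the company name, URLs, and description are hypothetical placeholders, and whether any particular generative engine reads this markup is an assumption, not something this article confirms.

```python
import json


def build_org_jsonld(name, url, description, same_as):
    """Return a schema.org Organization description as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
        # sameAs lists other profiles that refer to the same entity
        "sameAs": list(same_as),
    }
    return json.dumps(data, indent=2)


def wrap_in_script_tag(jsonld):
    """Wrap JSON-LD in the script tag used to embed it in an HTML page."""
    # Escape "</" so the payload cannot close the script tag early;
    # "\/" is a valid JSON escape for "/".
    safe = jsonld.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{safe}\n</script>'


# Hypothetical example values, for illustration only
org_jsonld = build_org_jsonld(
    name="Example Co",
    url="https://www.example.com",
    description="Example Co makes accounting software for freelancers.",
    same_as=["https://www.linkedin.com/company/example-co"],
)
snippet = wrap_in_script_tag(org_jsonld)
print(snippet)
```

The printed snippet can be pasted into a page's HTML. Keeping the description short and factual matters more than the exact fields chosen here.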

In a world where users click fewer links, being visible means being referenced, not ranked.

Where GOSH Fits in the Generative Era

As LLM competition intensifies, platforms like GOSH are emerging to help organizations navigate this new reality.

GOSH is built for a generative-first world — one where discoverability depends on how AI systems understand and reproduce information, not just how search engines index it.

By focusing on Generative Engine Optimization, GOSH helps brands, founders, and publishers:

- Understand how their content appears across different LLMs

- Identify gaps, inaccuracies, or omissions in AI-generated answers

- Structure authoritative signals so generative engines can reliably surface their expertise

In short, GOSH addresses a problem that didn’t exist in the early search era: how to stay visible and accurate when answers are generated, not retrieved.
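To make the idea of spotting omissions concrete, here is a minimal sketch of an answer audit. It is not GOSH's implementation. The model names, sample answers, and required phrases are all hypothetical, and the check is plain case-insensitive substring matching. A real audit would collect answers from each provider and would likely need fuzzier, meaning-based comparison.

```python
def find_missing_phrases(answer, required_phrases):
    """Return the required phrases that do not appear in the answer."""
    # Lowercase and collapse whitespace so line breaks do not hide a match
    normalized = " ".join(answer.lower().split())
    return [p for p in required_phrases if p.lower() not in normalized]


def audit_answers(answers_by_model, required_phrases):
    """Map each model name to the list of phrases its answer omits."""
    return {
        model: find_missing_phrases(answer, required_phrases)
        for model, answer in answers_by_model.items()
    }


# Hypothetical answers, hardcoded here instead of fetched from real models
sample_answers = {
    "model_a": "Example Co, founded in 2015, makes accounting software for freelancers.",
    "model_b": "Example Co is a company that sells software.",
}
required = ["Example Co", "founded in 2015", "accounting software"]

report = audit_answers(sample_answers, required)
for model, missing in report.items():
    status = "ok" if not missing else "missing: " + ", ".join(missing)
    print(f"{model}: {status}")
# model_a: ok
# model_b: missing: founded in 2015, accounting software
```

Substring matching only catches omissions. It will not flag an answer that mentions the right phrase inside a wrong claim, so inaccuracies still need human review.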

Why This Matters Now

As LLMs increasingly replace traditional search behaviors, discoverability is being redefined in real time.

Users may never settle on a single AI the way they settled on Google. Instead, they’ll move fluidly between tools — trusting whichever one feels right in the moment.

For businesses and creators, this means:

- Visibility is no longer owned by one platform

- Accuracy and authority must be machine-readable

- Optimization must adapt to generative systems, not just search indexes

A Familiar Fight With New Rules

The competition among LLMs mirrors the early days of search — a crowded field, shifting user loyalty, and a race to become the default interface for information.

But unlike the search wars, this battle may not end with a single winner.

In a world where AI generates answers instead of listing links, Generative Engine Optimization becomes the new foundation of discoverability. Platforms like GOSH exist to help organizations stay visible, accurate, and trusted across a fragmented AI landscape.

The future may not belong to one dominant AI.

It will belong to those who understand how generative engines decide what gets said — and who gets left out.